SCIENTIFIC METHOD

|

| The scientific method is often represented as an ongoing process. This diagram represents one variation, and there are many others. |

The scientific method is an empirical method of acquiring knowledge that has characterized the development of science since at least the 17th century (with notable practitioners in previous centuries). It involves careful observation, applying rigorous skepticism about what is observed, given that cognitive assumptions can distort how one interprets the observation. It involves formulating hypotheses, via induction, based on such observations; experimental and measurement-based testing of deductions drawn from the hypotheses; and refinement (or elimination) of the hypotheses based on the experimental findings. These are principles of the scientific method, as distinguished from a definitive series of steps applicable to all scientific enterprises.

Although procedures vary from one field of inquiry to another, the underlying process is frequently the same. The process in the scientific method involves making conjectures (hypothetical explanations), deriving predictions from the hypotheses as logical consequences, and then carrying out experiments or empirical observations based on those predictions. A hypothesis is a conjecture, based on knowledge obtained while seeking answers to the question. The hypothesis might be very specific, or it might be broad. Scientists then test hypotheses by conducting experiments or studies. A scientific hypothesis must be falsifiable, implying that it is possible to identify a possible outcome of an experiment or observation that conflicts with predictions deduced from the hypothesis; otherwise, the hypothesis cannot be meaningfully tested.

The purpose of an experiment is to determine whether observations agree with or conflict with the expectations deduced from a hypothesis. Experiments can take place anywhere from a garage to CERN's Large Hadron Collider. There are difficulties in a formulaic statement of method, however. Though the scientific method is often presented as a fixed sequence of steps, it represents rather a set of general principles. Not all steps take place in every scientific inquiry (nor to the same degree), and they are not always in the same order.

History

Important debates in the history of science concern skepticism that anything can be known for sure (such as the views of Francisco Sanches), rationalism (especially as advocated by René Descartes), inductivism, empiricism (as argued for by Francis Bacon, then rising to particular prominence with Isaac Newton and his followers), and hypothetico-deductivism, which came to the fore in the early 19th century.

The term "scientific method" emerged in the 19th century, when a significant institutional development of science was taking place and terminologies establishing clear boundaries between science and non-science, such as "scientist" and "pseudoscience", appeared. Throughout the 1830s and 1850s, at which time Baconianism was popular, naturalists like William Whewell, John Herschel, and John Stuart Mill engaged in debates over "induction" and "facts" and were focused on how to generate knowledge. In the late 19th and early 20th centuries, a debate over realism vs. antirealism was conducted as powerful scientific theories extended beyond the realm of the observable.

Problem-solving via scientific method

|

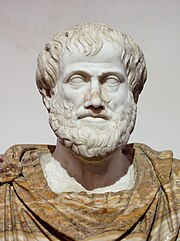

| Aristotle (384–322 BCE). "As regards his method, Aristotle is recognized as the inventor of scientific method because of his refined analysis of logical implications contained in demonstrative discourse, which goes well beyond natural logic and does not owe anything to the ones who philosophized before him." – Riccardo Pozzo |

The term "scientific method" came into popular use in the 20th century; Dewey's 1910 book, How We Think, inspired popular guidelines, popping up in dictionaries and science textbooks, although there was little consensus over its meaning. Although there was growth through the middle of the 20th century, by the 1960s and 1970s numerous influential philosophers of science such as Thomas Kuhn and Paul Feyerabend had questioned the universality of the "scientific method" and in doing so largely replaced the notion of science as a homogeneous and universal method with that of it being a heterogeneous and local practice. In particular, Paul Feyerabend, in the 1975 first edition of his book Against Method, argued against there being any universal rules of science. Popper 1963, Gauch 2003, and Tow 2010 disagree with Feyerabend's claim; problem solvers and researchers are to be prudent with their resources during their inquiry.

Later stances include physicist Lee Smolin's 2013 essay "There Is No Scientific Method", in which he espouses ethical principles, and historian of science Daniel Thurs's chapter in the 2015 book Newton's Apple and Other Myths about Science, which concluded that the scientific method is a myth or, at best, an idealization. As myths are beliefs, they are subject to the narrative fallacy, as Taleb points out. Philosophers Robert Nola and Howard Sankey, in their 2007 book Theories of Scientific Method, said that debates over scientific method continue, and argued that Feyerabend, despite the title of Against Method, accepted certain rules of method and attempted to justify those rules with a meta methodology. Staddon argues it is a mistake to try following rules in the absence of an algorithmic scientific method; in that case, "science is best understood through examples". But algorithmic methods, such as disproof of existing theory by experiment, have been used since Alhacen's Book of Optics, and Galileo's Two New Sciences and The Assayer still stand as scientific method. They contradict Feyerabend's stance.

|

| Johannes Kepler (1571–1630). "Kepler shows his keen logical sense in detailing the whole process by which he finally arrived at the true orbit. This is the greatest piece of Retroductive reasoning ever performed." – C. S. Peirce, c. 1896, on Kepler's reasoning through explanatory hypotheses |

The ubiquitous element in the scientific method is empiricism. This is in opposition to stringent forms of rationalism: the scientific method embodies the position that reason alone cannot solve a particular scientific problem. A strong formulation of the scientific method is not always aligned with a form of empiricism in which the empirical data is put forward in the form of experience or other abstracted forms of knowledge; in current scientific practice, however, the use of scientific modelling and reliance on abstract typologies and theories is normally accepted. The scientific method counters claims that revelation, political or religious dogma, appeals to tradition, commonly held beliefs, common sense, or currently held theories pose the only possible means of demonstrating truth.

|

| Galileo Galilei (1564–1642). According to Albert Einstein, "All knowledge of reality starts from experience and ends in it. Propositions arrived at by purely logical means are completely empty as regards reality. Because Galileo saw this, and particularly because he drummed it into the scientific world, he is the father of modern physics – indeed, of modern science altogether." |

Different early expressions of empiricism and the scientific method can be found throughout history, for instance with the ancient Stoics, Epicurus, Alhazen, Avicenna, Roger Bacon, and William of Ockham. From the 16th century onwards, experiments were advocated by Francis Bacon, and performed by Giambattista della Porta, Johannes Kepler, and Galileo Galilei. There was particular development aided by theoretical works by Francisco Sanches, John Locke, George Berkeley, and David Hume.

A sea voyage from America to Europe afforded C. S. Peirce the distance to clarify his ideas, gradually resulting in the hypothetico-deductive model. Formulated in the 20th century, the model has undergone significant revision since first proposed (for a more formal discussion, see § Elements of the scientific method).

Overview

The scientific method is the process by which science is carried out. As in other areas of inquiry, science (through the scientific method) can build on previous knowledge and develop a more sophisticated understanding of its topics of study over time. This model can be seen to underlie the scientific revolution.

Process

The overall process involves making conjectures (hypotheses), deriving predictions from them as logical consequences, and then carrying out experiments based on those predictions to determine whether the original conjecture was correct. There are difficulties in a formulaic statement of method, however. Though the scientific method is often presented as a fixed sequence of steps, these actions are better considered as general principles. Not all steps take place in every scientific inquiry (nor to the same degree), and they are not always done in the same order. As noted by scientist and philosopher William Whewell, "invention, sagacity, genius" are required at every step.

Formulation of a question

The question can refer to the explanation of a specific observation, as in "Why is the sky blue?" but can also be open-ended, as in "How can I design a drug to cure this particular disease?" This stage frequently involves finding and evaluating evidence from previous experiments, personal scientific observations or assertions, as well as the work of other scientists. If the answer is already known, a different question that builds on the evidence can be posed. When applying the scientific method to research, determining a good question can be very difficult, and it will affect the outcome of the investigation.

Hypothesis

A hypothesis is a conjecture, based on knowledge obtained while formulating the question, that may explain any given behavior. The hypothesis might be very specific, for example Einstein's equivalence principle or Francis Crick's "DNA makes RNA makes protein", or it might be broad, for example "unknown species of life dwell in the unexplored depths of the oceans". See § Hypothesis development.

A statistical hypothesis is a conjecture about a given statistical population. For example, the population might be people with a particular disease. One conjecture might be that a new drug will cure the disease in some of the people in that population, as in a clinical trial of the drug. A null hypothesis would conjecture that the statistical hypothesis is false; for example, that the new drug does nothing, and that any cure in the population would be caused by chance (a random variable).

An alternative to the null hypothesis, to be falsifiable, must say that a treatment program with the drug does better than chance. To test the statement that a treatment program with the drug does better than chance, an experiment is designed in which a portion of the population (the control group) is to be left untreated, while another, separate portion of the population is to be treated. t-Tests could then specify how large the treated groups, and how large the control groups, are to be, in order to infer whether some course of treatment of the population has resulted in a cure of some of them, in each of the groups. The groups are examined, in turn, by the researchers, in a protocol.

Strong inference could alternatively propose multiple alternative hypotheses embodied in randomized controlled trials, treatments A, B, C, ... (say in a blinded experiment with varying dosages, or with lifestyle changes, and so forth) so as not to introduce confirmation bias in favor of a specific course of treatment. Ethical considerations could be used to minimize the numbers in the untreated groups, e.g., use almost every treatment in every group, but excluding A, B, C, ..., respectively as controls.
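The comparison of a treated group against a control group described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it swaps in a one-sided two-proportion z-test (a normal approximation, not the t-test mentioned in the text), the function name `two_proportion_z_test` is invented for this sketch, and the patient counts are made up.

```python
import math


def two_proportion_z_test(cured_treated, n_treated, cured_control, n_control):
    """One-sided z-test: is the cure rate higher in the treated group?

    Returns (z, p_value). A small p_value counts against the null
    hypothesis that the drug does nothing.
    """
    p_treated = cured_treated / n_treated
    p_control = cured_control / n_control
    # Under the null hypothesis both groups share one cure rate, so pool them.
    p_pooled = (cured_treated + cured_control) / (n_treated + n_control)
    std_err = math.sqrt(p_pooled * (1 - p_pooled) * (1 / n_treated + 1 / n_control))
    if std_err == 0:
        raise ValueError("cure rates of exactly 0% or 100% cannot be tested this way")
    z = (p_treated - p_control) / std_err
    # Survival function of the standard normal distribution.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


# Hypothetical trial: 60 of 100 treated patients cured, 40 of 100 controls cured.
z, p_value = two_proportion_z_test(60, 100, 40, 100)
print(f"z = {z:.3f}, one-sided p-value = {p_value:.4f}")
```

A p-value below a threshold chosen in advance (0.05 is a common convention) would lead the researchers to reject the null hypothesis; a larger one would leave it standing.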

Prediction

The prediction step deduces the logical consequences of the hypothesis before the outcome is known. These predictions are expectations for the results of testing. If the result is already known, it is evidence that is ready to be considered in acceptance or rejection of the hypothesis. The evidence is also stronger if the actual result of the predictive test is not already known, as tampering with the test can be ruled out, as can hindsight bias (see postdiction). Ideally, the prediction must also distinguish the hypothesis from likely alternatives; if two hypotheses make the same prediction, observing the prediction to be correct is not evidence for either one over the other. (These statements about the relative strength of evidence can be mathematically derived using Bayes' Theorem.)

The consequence, therefore, is to be stated at the same time as or shortly after the statement of the hypothesis, but before the experimental result is known.

Likewise, the test protocol is to be stated before execution of the test. These requirements become precautions against tampering, and aid the reproducibility of the experiment.
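The remark about Bayes' Theorem in this section can be made concrete with a small calculation. The sketch below assumes just two competing hypotheses, H1 and H2, and uses invented prior and likelihood values; the function name `posterior` is hypothetical. It shows that when both hypotheses assign the same probability to the predicted outcome, observing that outcome leaves their relative standing unchanged.

```python
from fractions import Fraction


def posterior(prior_h1, likelihood_h1, likelihood_h2):
    """Posterior probability of H1 after the predicted outcome is observed.

    Assumes H2 is the only alternative, so its prior is 1 - prior_h1.
    likelihood_hX is the probability of the outcome if hypothesis X is true.
    """
    prior_h2 = 1 - prior_h1
    evidence = prior_h1 * likelihood_h1 + prior_h2 * likelihood_h2
    if evidence == 0:
        raise ValueError("the observed outcome is impossible under both hypotheses")
    return prior_h1 * likelihood_h1 / evidence


half = Fraction(1, 2)

# Both hypotheses predict the outcome equally well: the posterior equals the prior.
same_prediction = posterior(half, Fraction(9, 10), Fraction(9, 10))

# Only H1 predicts the outcome strongly: the observation now favors H1.
distinct_prediction = posterior(half, Fraction(9, 10), Fraction(1, 10))

print(same_prediction, distinct_prediction)
```

Exact fractions are used instead of floating-point numbers so that the "no change" case is visible without rounding noise.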

Testing

Suitable tests of a hypothesis compare the expected values from the tests of that hypothesis with the actual results of those tests. Scientists (and other people) can then secure, or discard, their hypotheses by conducting suitable experiments.

Analysis

An analysis determines, from the results of the experiment, the next actions to take. The expected values from the test of the alternative hypothesis are compared to the expected values resulting from the null hypothesis (that is, a prediction of no difference in the status quo). The difference between expected and actual values indicates which hypothesis better explains the resulting data from the experiment. In cases where an experiment is repeated many times, a statistical analysis, such as a chi-squared test of whether the null hypothesis is true, may be required.

Evidence from other scientists, and from experience, is available for incorporation at any stage in the process. Depending on the complexity of the experiment, iteration of the process may be required to gather sufficient evidence to answer the question with confidence, or to build up other answers to highly specific questions, to answer a single broader question.

When the evidence has falsified the alternative hypothesis, a new hypothesis is required; if the evidence does not conclusively justify discarding the alternative hypothesis, other predictions from the alternative hypothesis might be considered. Pragmatic considerations, such as the resources available to continue inquiry, might guide the investigation's further course. When evidence for a hypothesis strongly supports that hypothesis, further questioning can follow, for insight into the broader inquiry under investigation.
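The chi-squared comparison of expected and actual values mentioned in this section can be sketched as follows. The example is a simplification under stated assumptions: the counts are invented, the function names are hypothetical, and the p-value helper is only valid for one degree of freedom (two outcome categories).

```python
import math


def chi_squared_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected over all outcome categories."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def p_value_one_degree_of_freedom(statistic):
    """Probability of a statistic at least this large if the null hypothesis holds.

    Valid only for 1 degree of freedom, where the chi-squared survival
    function reduces to erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(statistic / 2))


# Hypothetical repeated experiment: 100 trials with two possible outcomes.
# The null hypothesis expects a 50/50 split; 60/40 was actually observed.
observed_counts = [60, 40]
expected_counts = [50, 50]

statistic = chi_squared_statistic(observed_counts, expected_counts)
p_value = p_value_one_degree_of_freedom(statistic)
print(f"chi-squared = {statistic:.2f}, p-value = {p_value:.4f}")
```

With more than two categories, the statistic is computed the same way, but the p-value needs the chi-squared distribution for the matching degrees of freedom, which a statistics library would normally supply.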

DNA example

The basic elements of the scientific method are illustrated by the following example (which occurred from 1944 to 1953) from the discovery of the structure of DNA:

* Question: Previous investigation of DNA had determined its chemical composition (the four nucleotides), the structure of each individual nucleotide, and other properties. DNA had been identified as the carrier of genetic information by the Avery–MacLeod–McCarty experiment in 1944, but the mechanism of how genetic information was stored in DNA was unclear.

* Hypothesis: Linus Pauling, Francis Crick and James D. Watson hypothesized that DNA had a helical structure.

* Prediction: If DNA had a helical structure, its X-ray diffraction pattern would be X-shaped. This prediction was determined using the mathematics of the helix transform, which had been derived by Cochran, Crick, and Vand (and independently by Stokes). This prediction was a mathematical construct, completely independent from the biological problem at hand.

* Experiment: Rosalind Franklin used pure DNA to perform X-ray diffraction to produce Photo 51. The results showed an X-shape.

* Analysis: When Watson saw the detailed diffraction pattern, he immediately recognized it as a helix. He and Crick then produced their model, using this information along with the previously known information about DNA's composition, especially Chargaff's rules of base pairing.

* The discovery became the starting point for many further studies involving the genetic material, such as the field of molecular genetics, and it was awarded the Nobel Prize in 1962. Each step of the example is examined in more detail later in the article.

Other components

The scientific method also includes other components required even when all the iterations of the steps above have been completed:

Replication

If an experiment cannot be repeated to produce the same results, this implies that the original results might have been in error. As a result, it is common for a single experiment to be performed multiple times, especially when there are uncontrolled variables or other indications of experimental error. For significant or surprising results, other scientists may also attempt to replicate the results for themselves, especially if those results would be important to their own work. Replication has become a contentious issue in social and biomedical science, where treatments are administered to groups of individuals. Typically an experimental group gets the treatment, such as a drug, and the control group gets a placebo. John Ioannidis in 2005 pointed out that the method being used has led to many findings that cannot be replicated.

External review

The process of peer review involves evaluation of the experiment by experts, who typically give their opinions anonymously. Some journals request that the experimenter provide lists of possible peer reviewers, especially if the field is highly specialized. Peer review does not certify the correctness of the results, only that, in the opinion of the reviewer, the experiments themselves were sound (based on the description supplied by the experimenter). If the work passes peer review, which occasionally may require new experiments requested by the reviewers, it will be published in a peer-reviewed scientific journal. The specific journal that publishes the results indicates the perceived quality of the work.

Data recording and sharing

Scientists typically are careful in recording their data, a requirement promoted by Ludwik Fleck (1896–1961) and others. Though not typically required, they might be requested to supply this data to other scientists who wish to replicate their original results (or parts of their original results), extending to the sharing of any experimental samples that may be difficult to obtain. See § Communication and community.

Instrumentation

Institutional researchers might acquire an instrument to institutionalize their tests. These instruments would utilize observations of the real world, which might agree with, or perhaps conflict with, their predictions deduced from their hypothesis. These institutions thereby reduce the research function to a cost/benefit, which is expressed as money, and the time and attention of the researchers to be expended, in exchange for a report to their constituents.

Current large instruments, such as CERN's Large Hadron Collider (LHC), LIGO, the National Ignition Facility (NIF), the International Space Station (ISS), or the James Webb Space Telescope (JWST), entail expected costs of billions of dollars and timeframes extending over decades. These kinds of institutions affect public policy, on a national or even international basis, and the researchers would require shared access to such machines and their adjunct infrastructure.

WRITTEN BY : ADRISH WAHEED
