ARTIFICIAL INTELLIGENCE TECHNOLOGY

Artificial intelligence (AI) is intelligence demonstrated by machines, as opposed to the natural intelligence displayed by animals, including humans. Leading AI textbooks define the field as the study of "intelligent agents": any system that perceives its environment and takes actions that maximize its chance of achieving its goals. Some popular accounts use the term "artificial intelligence" to describe machines that mimic "cognitive" functions that humans associate with the human mind, such as "learning" and "problem solving"; however, this definition is rejected by major AI researchers.

AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). As machines become increasingly capable, tasks considered to require "intelligence" are often removed from the definition of AI, a phenomenon known as the AI effect. For instance, optical character recognition is frequently excluded from things considered to be AI, having become a routine technology.

Artificial intelligence was founded as an academic discipline in 1956, and in the years since has experienced several waves of optimism, followed by disappointment and the loss of funding (known as an "AI winter"), followed by new approaches, success and renewed funding. AI research has tried and discarded many different approaches since its founding, including simulating the brain, modeling human problem solving, formal logic, large databases of knowledge and imitating animal behavior. In the first decades of the 21st century, highly mathematical statistical machine learning has dominated the field, and this technique has proved highly successful, helping to solve many challenging problems throughout industry and academia.

The various sub-fields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include reasoning, knowledge representation, planning, learning, natural language processing, perception, and the ability to move and manipulate objects. General intelligence (the ability to solve an arbitrary problem) is among the field's long-term goals. To solve these problems, AI researchers have adapted and integrated a wide range of problem-solving techniques, including search and mathematical optimization, formal logic, artificial neural networks, and methods based on statistics, probability and economics. AI also draws upon computer science, psychology, linguistics, philosophy, and many other fields.

The field was founded on the assumption that human intelligence "can be so precisely described that a machine can be made to simulate it". This raises philosophical arguments about the mind and the ethics of creating artificial beings endowed with human-like intelligence. These issues have been explored by myth, fiction, and philosophy since antiquity. Science fiction and futurology have also suggested that, with its enormous potential and power, AI may become an existential risk to humanity.

HISTORY

(Image: Silver didrachma from Crete depicting Talos, an ancient mythical automaton with artificial intelligence)

Artificial beings with intelligence appeared as storytelling devices in antiquity, and have been common in fiction, as in Mary Shelley's Frankenstein or Karel Čapek's R.U.R. These characters and their fates raised many of the same issues now discussed in the ethics of artificial intelligence.

The study of mechanical or "formal" reasoning began with philosophers and mathematicians in antiquity. The study of mathematical logic led directly to Alan Turing's theory of computation, which suggested that a machine, by shuffling symbols as simple as "0" and "1", could simulate any conceivable act of mathematical deduction. This insight that digital computers can simulate any process of formal reasoning is known as the Church–Turing thesis.

The Church–Turing thesis, along with concurrent discoveries in neurobiology, information theory and cybernetics, led researchers to consider the possibility of building an electronic brain. The first work that is now generally recognized as AI was McCulloch and Pitts' 1943 formal design for Turing-complete "artificial neurons".

When access to digital computers became possible in the mid-1950s, AI research began to explore the possibility that human intelligence could be reduced to step-by-step symbol manipulation, known as Symbolic AI or GOFAI. Approaches based on cybernetics or artificial neural networks were abandoned or pushed into the background.

The field of AI research was born at a workshop at Dartmouth College in 1956. The attendees became the founders and leaders of AI research. They and their students produced programs that the press described as "astonishing": computers were learning checkers strategies, solving word problems in algebra, proving logical theorems and speaking English. By the middle of the 1960s, research in the U.S. was heavily funded by the Department of Defense and laboratories had been established around the world.

Researchers in the 1960s and the 1970s were convinced that symbolic approaches would eventually succeed in creating a machine with artificial general intelligence and considered this the goal of their field. Herbert Simon predicted, "machines will be capable, within twenty years, of doing any work a man can do". Marvin Minsky agreed, writing, "within a generation ... the problem of creating 'artificial intelligence' will substantially be solved".

They failed to recognize the difficulty of some of the remaining tasks. Progress slowed and in 1974, in response to the criticism of Sir James Lighthill and ongoing pressure from the US Congress to fund more productive projects, both the U.S. and British governments cut off exploratory research in AI. The next few years would later be called an "AI winter", a period when obtaining funding for AI projects was difficult.

In the early 1980s, AI research was revived by the commercial success of expert systems, a form of AI program that simulated the knowledge and analytical skills of human experts. By 1985, the market for AI had reached over a billion dollars. At the same time, Japan's fifth generation computer project inspired the U.S. and British governments to restore funding for academic research. However, beginning with the collapse of the Lisp Machine market in 1987, AI once again fell into disrepute, and a second, longer-lasting winter began.

Many researchers began to doubt that the symbolic approach would be able to imitate all the processes of human cognition, especially perception, robotics, learning and pattern recognition. A number of researchers began to look into "sub-symbolic" approaches to specific AI problems. Robotics researchers, such as Rodney Brooks, rejected symbolic AI and focused on the basic engineering problems that would allow robots to move, survive, and learn their environment. Interest in neural networks and "connectionism" was revived by Geoffrey Hinton, David Rumelhart and others in the middle of the 1980s. Soft computing tools were developed in the 1980s, such as neural networks, fuzzy systems, Grey system theory, evolutionary computation and many tools drawn from statistics or mathematical optimization.

AI gradually restored its reputation in the late 1990s and early 21st century by finding specific solutions to specific problems. The narrow focus allowed researchers to produce verifiable results, exploit more mathematical methods, and collaborate with other fields (such as statistics, economics and mathematics). By 2000, solutions developed by AI researchers were being widely used, although in the 1990s they were rarely described as "artificial intelligence".

Faster computers, algorithmic improvements, and access to large amounts of data enabled advances in machine learning and perception; data-hungry deep learning methods started to dominate accuracy benchmarks around 2012. According to Bloomberg's Jack Clark, 2015 was a landmark year for artificial intelligence, with the number of software projects that use AI within Google increasing from "sporadic usage" in 2012 to more than 2,700 projects. He attributes this to an increase in affordable neural networks, due to a rise in cloud computing infrastructure and to an increase in research tools and datasets. In a 2017 survey, one in five companies reported they had "incorporated AI in some offerings or processes". The amount of research into AI (measured by total publications) increased by 50% in the years 2015–2019.

Numerous academic researchers became concerned that AI was no longer pursuing the original goal of creating versatile, fully intelligent machines. Much of current research involves statistical AI, which is overwhelmingly used to solve specific problems, even with highly successful techniques such as deep learning. This concern has led to the subfield of artificial general intelligence (or "AGI"), which had several well-funded institutions by the 2010s.

GOALS

The general problem of simulating (or creating) intelligence has been broken down into sub-problems. These consist of particular traits or capabilities that researchers expect an intelligent system to display. The traits described below have received the most attention.

Reasoning, problem solving

Early researchers developed algorithms that imitated the step-by-step reasoning that humans use when they solve puzzles or make logical deductions. By the late 1980s and 1990s, AI research had developed methods for dealing with uncertain or incomplete information, employing concepts from probability and economics.

Many of these algorithms proved to be insufficient for solving large reasoning problems because they experienced a "combinatorial explosion": they became exponentially slower as the problems grew larger. Even humans rarely use the step-by-step deduction that early AI research could model. They solve most of their problems using fast, intuitive judgments.

Knowledge representation

(Image: An ontology represents knowledge as a set of concepts within a domain and the relationships between those concepts.)

Knowledge representation and knowledge engineering allow AI programs to answer questions intelligently and make deductions about real-world facts.

A representation of "what exists" is an ontology: the set of objects, relations, concepts, and properties formally described so that software agents can interpret them. The most general ontologies are called upper ontologies, which attempt to provide a foundation for all other knowledge and act as mediators between domain ontologies that cover specific knowledge about a particular knowledge domain (field of interest or area of concern). A truly intelligent program would also need access to commonsense knowledge: the set of facts that an average person knows. The semantics of an ontology is typically represented in a description logic, such as the Web Ontology Language.

AI research has developed tools to represent specific domains, such as: objects, properties, categories and relations between objects; situations, events, states and time; causes and effects; knowledge about knowledge (what we know about what other people know); default reasoning (things that humans assume are true until they are told differently and will remain true even when other facts are changing); as well as other domains. Among the most difficult problems in AI are: the breadth of commonsense knowledge (the number of atomic facts that the average person knows is enormous); and the sub-symbolic form of most commonsense knowledge (much of what people know is not represented as "facts" or "statements" that they could express verbally).

Formal knowledge representations are used in content-based indexing and retrieval, scene interpretation, clinical decision support, knowledge discovery (mining "interesting" and actionable inferences from large databases), and other areas.

Planning

An intelligent agent that can plan makes a representation of the state of the world, makes predictions about how its actions will change it and makes choices that maximize the utility (or "value") of the available choices. In classical planning problems, the agent can assume that it is the only system acting in the world, allowing the agent to be certain of the consequences of its actions. However, if the agent is not the only actor, then it must reason under uncertainty, and continuously re-assess its environment and adapt. Multi-agent planning uses the cooperation and competition of many agents to achieve a given goal. Emergent behavior such as this is used by evolutionary algorithms and swarm intelligence.

Learning

Machine learning (ML), a fundamental concept of AI research since the field's inception, is the study of computer algorithms that improve automatically through experience.

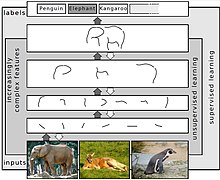

Unsupervised learning finds patterns in a stream of input. Supervised learning requires a human to label the input data first, and comes in two main varieties: classification and numerical regression. Classification is used to determine what category something belongs in: the program sees a number of examples of things from several categories and will learn to classify new inputs. Regression is the attempt to produce a function that describes the relationship between inputs and outputs and predicts how the outputs should change as the inputs change. Both classifiers and regression learners can be viewed as "function approximators" trying to learn an unknown (possibly implicit) function; for example, a spam classifier can be viewed as learning a function that maps from the text of an email to one of two categories, "spam" or "not spam". In reinforcement learning the agent is rewarded for good responses and punished for bad ones. The agent classifies its responses to form a strategy for operating in its problem space. Transfer learning is when the knowledge gained from one problem is applied to a new problem.
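Regression as function approximation can be made concrete with its simplest case: fitting a straight line to labelled examples by ordinary least squares. The sketch below is a minimal illustration that assumes a single numeric input; the name fit_line and the data points are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the line passes through the means.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Use the learned function to predict the output for a new input."""
    return slope * x + intercept


# Hypothetical labelled data: roughly y = 2x + 1 with a little noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
slope, intercept = fit_line(xs, ys)
```

Here the learner recovers a slope of about 1.97 and an intercept of about 1.06, close to the line the noisy data was drawn around; a classifier would differ only in mapping inputs to discrete labels instead of numbers.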

Computational learning theory can assess learners by computational complexity, by sample complexity (how much data is required), or by other notions of optimization.

Natural language processing

(Image: A parse tree represents the syntactic structure of a sentence according to some formal grammar.)

Natural language processing (NLP) allows machines to read and understand human language. A sufficiently powerful natural language processing system would enable natural-language user interfaces and the acquisition of knowledge directly from human-written sources, such as newswire texts. Some straightforward applications of NLP include information retrieval, question answering and machine translation.

Symbolic AI used formal syntax to translate the deep structure of sentences into logic. This failed to produce useful applications, due to the intractability of logic and the breadth of commonsense knowledge. Modern statistical techniques include co-occurrence frequencies (how often one word appears near another), "keyword spotting" (searching for a particular word to retrieve information), transformer-based deep learning (which finds patterns in text), and others. They have achieved acceptable accuracy at the page or paragraph level, and, by 2019, could generate coherent text.
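Co-occurrence frequency, the simplest of the statistical techniques listed above, just counts how often two words appear close together. The following is a minimal sketch assuming whitespace tokenization and a fixed window of neighboring positions; the function name and the sample sentence are hypothetical.

```python
from collections import Counter


def cooccurrence_counts(tokens, window=2):
    """Count how often each unordered word pair appears within `window` positions."""
    counts = Counter()
    for i, word in enumerate(tokens):
        # Look only ahead, so each pair of positions is counted exactly once.
        for j in range(i + 1, min(i + 1 + window, len(tokens))):
            pair = tuple(sorted((word, tokens[j])))
            counts[pair] += 1
    return counts


text = "the cat sat on the mat the cat ran"
counts = cooccurrence_counts(text.split(), window=2)
```

In this toy sentence the pair ("cat", "the") is counted twice; over a large corpus such counts become the raw statistics from which word associations are estimated.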

Perception

(Image: Feature detection (pictured: edge detection) helps AI compose informative abstract structures out of raw data.)

Machine perception is the ability to use input from sensors (such as cameras, microphones, wireless signals, and active lidar, sonar, radar, and tactile sensors) to deduce aspects of the world. Applications include speech recognition, facial recognition, and object recognition.

Computer vision is the ability to analyze visual input.

Motion and manipulation

AI is heavily used in robotics. Localization is how a robot knows its location and maps its environment. When given a small, static, and visible environment, this is easy; however, dynamic environments, such as (in endoscopy) the interior of a patient's breathing body, pose a greater challenge.

Motion planning is the process of breaking down a movement task into "primitives" such as individual joint movements. Such movement often involves compliant motion, a process where movement requires maintaining physical contact with an object. Robots can learn from experience how to move efficiently despite the presence of friction and gear slippage.

Social intelligence

(Image: Kismet, a robot with rudimentary social skills)

Affective computing is an interdisciplinary umbrella that comprises systems which recognize, interpret, process, or simulate human feeling, emotion and mood. For example, some virtual assistants are programmed to speak conversationally or even to banter humorously; this makes them appear more sensitive to the emotional dynamics of human interaction, or otherwise facilitates human–computer interaction. However, this tends to give naïve users an unrealistic conception of how intelligent existing computer agents actually are.

Moderate successes related to affective computing include textual sentiment analysis and, more recently, multimodal sentiment analysis, wherein AI classifies the affects displayed by a videotaped subject.

General intelligence

A machine with general intelligence can solve a wide variety of problems with a breadth and versatility similar to human intelligence. There are several competing ideas about how to develop artificial general intelligence. Hans Moravec and Marvin Minsky argue that work in different individual domains can be incorporated into an advanced multi-agent system or cognitive architecture with general intelligence. Pedro Domingos hopes that there is a conceptually straightforward, but mathematically difficult, "master algorithm" that could lead to AGI. Others believe that anthropomorphic features like an artificial brain or simulated child development will someday reach a critical point where general intelligence emerges.

TOOLS

Search and optimization

Many problems in AI can be solved theoretically by intelligently searching through many possible solutions: reasoning can be reduced to performing a search. For example, logical proof can be viewed as searching for a path that leads from premises to conclusions, where each step is the application of an inference rule. Planning algorithms search through trees of goals and subgoals, attempting to find a path to a target goal, a process called means-ends analysis. Robotics algorithms for moving limbs and grasping objects use local searches in configuration space.
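Treating reasoning or planning as "searching for a path" can be illustrated with breadth-first search over an explicit state graph. This is a minimal sketch assuming the states and their successors are given as a small dictionary; in a real prover or planner the successors would be generated by inference rules or actions. The graph and function name are hypothetical.

```python
from collections import deque


def breadth_first_search(graph, start, goal):
    """Return a shortest path (fewest steps) from start to goal, or None."""
    frontier = deque([start])
    parent = {start: None}  # doubles as the set of already-visited states
    while frontier:
        state = frontier.popleft()
        if state == goal:
            # Follow parent links back to the start to rebuild the path.
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in graph.get(state, []):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None


# Hypothetical state graph: each edge is one applicable step (e.g. an inference rule).
graph = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
}
```

Calling breadth_first_search(graph, "A", "F") returns ["A", "B", "D", "F"]. Because it expands every state level by level, this exhaustive search is exactly what breaks down when the state space grows large.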

Simple exhaustive searches are rarely sufficient for most real-world problems: the search space (the number of places to search) quickly grows to astronomical numbers. The result is a search that is too slow or never completes. The solution, for many problems, is to use "heuristics" or "rules of thumb" that prioritize choices in favor of those more likely to reach a goal and to do so in a shorter number of steps. In some search methodologies heuristics can also serve to eliminate some choices unlikely to lead to a goal (called "pruning the search tree"). Heuristics supply the program with a "best guess" for the path on which the solution lies. Heuristics limit the search for solutions to a smaller sample size.
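A* search is a widely used example of heuristic search: it always expands the state whose cost so far plus estimated remaining cost is lowest. The sketch below assumes a 4-connected grid with "#" marking walls and uses Manhattan distance as the heuristic; the grid and function name are hypothetical, and it returns only the path length to stay short.

```python
import heapq


def a_star(grid, start, goal):
    """A* on a 4-connected grid of strings ('#' = wall). Returns path length or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates the true cost on this grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (g + h, g, cell)
    best_g = {start: 0}
    while open_heap:
        _f, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            return g
        if g > best_g.get(cell, float("inf")):
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = ng
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


grid = [
    "....",
    ".##.",
    "....",
]
```

For this grid, a_star(grid, (0, 0), (2, 3)) returns 5. The heuristic steers expansion toward the goal, so cells pointing away from it are usually never popped, which is the practical payoff described above.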

(Image: A particle swarm seeking the global minimum)

A very different kind of search came to prominence in the 1990s, based on the mathematical theory of optimization. For many problems, it is possible to begin the search with some form of a guess and then refine the guess incrementally until no more refinements can be made. These algorithms can be visualized as blind hill climbing: we begin the search at a random point on the landscape, and then, by jumps or steps, we keep moving our guess uphill, until we reach the top. Other optimization algorithms are simulated annealing, beam search and random optimization. Evolutionary computation uses a form of optimization search. For example, it may begin with a population of organisms (the guesses) and then allow them to mutate and recombine, selecting only the fittest to survive each generation (refining the guesses). Classic evolutionary algorithms include genetic algorithms, gene expression programming, and genetic programming.
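The hill-climbing picture above translates almost directly into code. This minimal sketch assumes a one-dimensional objective and a fixed step size; the landscape function, the step of 0.1 and the seed are hypothetical choices for illustration.

```python
import random


def hill_climb(f, x, step=0.1, max_iters=10_000):
    """Greedy hill climbing: move to the better neighbour until none improves f."""
    for _ in range(max_iters):
        best = max((x - step, x + step), key=f)
        if f(best) <= f(x):
            return x  # no uphill neighbour left: a (possibly local) peak
        x = best
    return x


def landscape(x):
    # Hypothetical objective with a single peak at x = 3.
    return -(x - 3.0) ** 2


rng = random.Random(0)
start = rng.uniform(-10.0, 10.0)  # "begin the search at a random point"
peak = hill_climb(landscape, start)
```

The result lands within half a step of 3. On a landscape with several peaks the same loop stops at whichever peak is nearest the start, which is the weakness that simulated annealing and population-based methods try to address.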

Alternatively, distributed search processes can coordinate via swarm intelligence algorithms. Two popular swarm algorithms used in search are particle swarm optimization (inspired by bird flocking) and ant colony optimization (inspired by ant trails).
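Particle swarm optimization can be sketched in a few dozen lines: each particle keeps a velocity that is pulled toward its own best position and toward the swarm's best. This is a basic "global-best" variant under assumed parameter values (inertia w, attraction weights c1 and c2, swarm size and iteration count are hypothetical defaults), applied to a hypothetical test function.

```python
import random


def particle_swarm(f, dim, n_particles=20, iters=200, w=0.7, c1=1.5, c2=1.5,
                   bound=5.0, seed=0):
    """Minimise f with a basic global-best PSO; particles start in [-bound, bound]^dim."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    personal_best = [p[:] for p in pos]
    personal_val = [f(p) for p in pos]
    g_idx = min(range(n_particles), key=lambda i: personal_val[i])
    global_best, global_val = personal_best[g_idx][:], personal_val[g_idx]

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward own best + pull toward the swarm's best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (personal_best[i][d] - pos[i][d])
                             + c2 * r2 * (global_best[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < personal_val[i]:
                personal_best[i], personal_val[i] = pos[i][:], val
                if val < global_val:
                    global_best, global_val = pos[i][:], val
    return global_best, global_val


def sphere(x):
    # Hypothetical test function: global minimum 0 at the origin.
    return sum(v * v for v in x)


best, best_val = particle_swarm(sphere, dim=2)
```

No particle is told where the minimum is; the swarm converges because good positions found by any particle are shared through global_best. Positions are not clamped to the initial box in this sketch.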

Logic

Logic is used for knowledge representation and problem solving, but it can be applied to other problems as well. For example, the satplan algorithm uses logic for planning and inductive logic programming is a method for learning.

Several different forms of logic are used in AI research. Propositional logic involves truth functions such as "or" and "not". First-order logic adds quantifiers and predicates, and can express facts about objects, their properties, and their relations with each other. Fuzzy logic assigns a "degree of truth" (between 0 and 1) to vague statements such as "Alice is old" (or rich, or tall, or hungry), that are too linguistically imprecise to be completely true or false. Default logics, non-monotonic logics and circumscription are forms of logic designed to help with default reasoning and the qualification problem. Several extensions of logic have been designed to handle specific domains of knowledge, such as: description logics; situation calculus, event calculus and fluent calculus (for representing events and time); causal calculus; belief calculus (belief revision); and modal logics. Logics to model contradictory or inconsistent statements arising in multi-agent systems have also been designed, such as paraconsistent logics.
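The fuzzy-logic idea of a "degree of truth" is easy to show concretely. The sketch below assumes simple piecewise-linear membership functions (the thresholds for "old" and "rich" are hypothetical) and the common min/max/complement connectives; other fuzzy connectives exist.

```python
def degree_old(age):
    """Hypothetical membership for "old": 0 up to 40, 1 from 70, linear in between."""
    if age <= 40:
        return 0.0
    if age >= 70:
        return 1.0
    return (age - 40) / 30


def degree_rich(wealth):
    """Hypothetical membership for "rich" (wealth in thousands), clipped to [0, 1]."""
    return min(max((wealth - 100) / 900, 0.0), 1.0)


# Min/max connectives: AND = min, OR = max, NOT = 1 - x.
def fuzzy_and(a, b):
    return min(a, b)


def fuzzy_or(a, b):
    return max(a, b)


def fuzzy_not(a):
    return 1.0 - a


alice_old = degree_old(55)      # 0.5: "Alice is old" is half true
alice_rich = degree_rich(400)
old_and_rich = fuzzy_and(alice_old, alice_rich)
```

Unlike propositional logic, "Alice is old AND rich" here gets an intermediate truth value (the smaller of the two degrees) rather than being forced to true or false.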

Probabilistic methods for uncertain reasoning

(Image: Expectation-maximization clustering of Old Faithful eruption data starts from a random guess but then successfully converges on an accurate clustering of the two physically distinct modes of eruption.)

Many problems in AI (in reasoning, planning, learning, perception, and robotics) require the agent to operate with incomplete or uncertain information. AI researchers have devised a number of powerful tools to solve these problems using methods from probability theory and economics. Bayesian networks are a very general tool that can be used for various problems: reasoning (using the Bayesian inference algorithm), learning (using the expectation-maximization algorithm), planning (using decision networks) and perception (using dynamic Bayesian networks).
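Reasoning in a Bayesian network can be illustrated with exact inference by enumeration on a three-node network. The network structure and all probabilities below are hypothetical: Rain and Sprinkler are independent causes of WetGrass, and the query is how likely rain is once the grass is observed to be wet.

```python
from itertools import product

# Hypothetical network: Rain -> WetGrass <- Sprinkler.
P_RAIN = 0.2
P_SPRINKLER = 0.1
P_WET_GIVEN = {  # P(WetGrass = True | Rain, Sprinkler)
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Joint probability, factorised along the network's edges."""
    p = (P_RAIN if rain else 1 - P_RAIN) * (P_SPRINKLER if sprinkler else 1 - P_SPRINKLER)
    p_wet = P_WET_GIVEN[(rain, sprinkler)]
    return p * (p_wet if wet else 1 - p_wet)


def p_rain_given_wet():
    """P(Rain = True | WetGrass = True), summing out the hidden Sprinkler variable."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
    return numerator / evidence
```

With these numbers the posterior is 0.1818 / 0.2458, roughly 0.74, up from a prior of 0.2. Enumeration is exponential in the number of hidden variables, which is why larger networks use more efficient exact or approximate algorithms.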

Probabilistic algorithms can also be used for filtering, prediction, smoothing and finding explanations for streams of data, helping perception systems to analyze processes that occur over time (e.g., hidden Markov models or Kalman filters).
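Filtering a noisy stream of data can be illustrated with the simplest Kalman filter: a single hidden value that stays (nearly) constant and is measured with noise. This sketch assumes scalar state and known noise variances; the defaults for the initial guess, initial variance and noise levels are hypothetical.

```python
def kalman_1d(measurements, x0=0.0, p0=1000.0, meas_var=1.0, process_var=0.0):
    """Scalar Kalman filter for a (nearly) constant value observed with noise.

    Returns the estimate after each measurement and the final estimate variance.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the model says the value stays put, so only uncertainty grows.
        p = p + process_var
        # Update: the Kalman gain k weighs the measurement against the prediction.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates, p


readings = [5.1, 4.9, 5.2, 4.8, 5.0]  # hypothetical noisy sensor readings
estimates, variance = kalman_1d(readings)
```

Each update shrinks the variance, so later measurements move the estimate less; with a large initial variance the estimate quickly settles near the average of the readings.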

A key concept from the science of economics is "utility": a measure of how valuable something is to an intelligent agent. Precise mathematical tools have been developed that analyze how an agent can make choices and plan, using decision theory, decision analysis, and information value theory. These tools include models such as Markov decision processes, dynamic decision networks, game theory and mechanism design.
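A Markov decision process can be solved by value iteration, which repeatedly updates each state's utility to the best immediate reward plus the discounted utility of the next state. To keep the sketch short it assumes deterministic transitions; the corridor world, rewards and discount factor are all hypothetical.

```python
def value_iteration(states, actions, step, gamma=0.9, tol=1e-9):
    """Value iteration for a deterministic MDP given as step(s, a) -> (next, reward, done)."""

    def q_value(values, s, a):
        nxt, reward, done = step(s, a)
        # Bellman backup: immediate reward plus discounted value of the next state.
        return reward + (0.0 if done else gamma * values[nxt])

    values = {s: 0.0 for s in states}
    while True:
        new_values = {s: max(q_value(values, s, a) for a in actions) for s in states}
        delta = max(abs(new_values[s] - values[s]) for s in states)
        values = new_values
        if delta < tol:
            break
    # Greedy policy: in each state choose the action with the highest backed-up value.
    policy = {s: max(actions, key=lambda a: q_value(values, s, a)) for s in states}
    return values, policy


GOAL = 3  # hypothetical corridor: states 0..2, reward 1 for stepping into the goal


def corridor_step(s, a):
    if a == "right":
        if s + 1 == GOAL:
            return GOAL, 1.0, True
        return s + 1, 0.0, False
    return max(s - 1, 0), 0.0, False  # "left": state 0 is against a wall


values, policy = value_iteration([0, 1, 2], ["left", "right"], corridor_step)
```

The utilities come out as 0.81, 0.9 and 1.0 for states 0, 1 and 2, and the resulting policy moves right everywhere: the discount factor makes the same reward worth less the further away it is.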

Classifiers and statistical learning methods

The simplest AI applications can be divided into two types: classifiers ("if shiny then diamond") and controllers ("if diamond then pick up"). Controllers do, however, also classify conditions before inferring actions, and therefore classification forms a central part of many AI systems. Classifiers are functions that use pattern matching to determine the closest match. They can be tuned according to examples, making them very attractive for use in AI. These examples are known as observations or patterns. In supervised learning, each pattern belongs to a certain predefined class. A class is a decision that has to be made. All the observations combined with their class labels are known as a data set. When a new observation is received, that observation is classified based on previous experience.

A classifier can be trained in various ways; there are many statistical and machine learning approaches. The decision tree is the simplest and most widely used symbolic machine learning algorithm. The k-nearest neighbor algorithm was the most widely used analogical AI until the mid-1990s. Kernel methods such as the support vector machine (SVM) displaced k-nearest neighbor in the 1990s. The naive Bayes classifier is reportedly the "most widely used learner" at Google, due in part to its scalability. Neural networks are also used for classification.
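The k-nearest neighbor algorithm is the most direct example of classifying a new observation "based on previous experience": it finds the stored observations closest to the new one and takes a majority vote of their labels. This sketch assumes numeric feature tuples and Euclidean distance; the training data is hypothetical.

```python
import math
from collections import Counter


def knn_classify(train, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Sort the labelled observations by Euclidean distance to the query.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical data set: (feature vector, class label) pairs.
train = [
    ((1.0, 1.0), "spam"),
    ((1.2, 0.8), "spam"),
    ((0.9, 1.1), "spam"),
    ((5.0, 5.0), "not spam"),
    ((5.2, 4.9), "not spam"),
    ((4.8, 5.1), "not spam"),
]
```

For example, knn_classify(train, (1.1, 0.9)) returns "spam". There is no training step at all, which is the strength of the method on small data sets and its weakness on large ones, since every query compares against every stored observation.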

Classifier performance depends greatly on the characteristics of the data to be classified, such as the dataset size, distribution of samples across classes, the dimensionality, and the level of noise. Model-based classifiers perform well if the assumed model is an extremely good fit for the actual data. Otherwise, if no matching model is available, and if accuracy (rather than speed or scalability) is the sole concern, conventional wisdom is that discriminative classifiers (especially SVM) tend to be more accurate than model-based classifiers such as "naive Bayes" on most practical data sets.

Artificial neural networks

(Image: A neural network is an interconnected group of nodes, akin to the vast network of neurons in the human brain.)

Neural networks were inspired by the architecture of neurons in the human brain. A simple "neuron" N accepts input from other neurons, each of which, when activated (or "fired"), casts a weighted "vote" for or against whether neuron N should itself activate. Learning requires an algorithm to adjust these weights based on the training data; one simple algorithm (dubbed "fire together, wire together") is to increase the weight between two connected neurons when the activation of one triggers the successful activation of another. Neurons have a continuous spectrum of activation; in addition, neurons can process inputs in a nonlinear way rather than weighing straightforward votes.

Modern neural networks model complex relationships between inputs and outputs and find patterns in data. They can learn continuous functions and even digital logical operations. Neural networks can be viewed as a type of mathematical optimization: they perform gradient descent on a multi-dimensional topology that was created by training the network. The most common training technique is the backpropagation algorithm. Other learning techniques for neural networks are Hebbian learning ("fire together, wire together"), GMDH or competitive learning.
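Gradient descent can be seen at its smallest scale by training a single sigmoid neuron to compute the logical AND of two inputs. This is not full backpropagation (there are no hidden layers); backpropagation applies the same gradient computation layer by layer via the chain rule. The learning rate, epoch count and cross-entropy loss are assumptions chosen for the illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, lr=0.5, epochs=5000):
    """Batch gradient descent on cross-entropy loss for one sigmoid neuron."""
    w1 = w2 = b = 0.0
    n = len(data)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), target in data:
            y = sigmoid(w1 * x1 + w2 * x2 + b)
            # For sigmoid + cross-entropy, d(loss)/d(pre-activation) = y - target.
            err = y - target
            g1 += err * x1
            g2 += err * x2
            gb += err
        # Step each parameter against its batch-averaged gradient.
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w1, w2, b = train_neuron(AND_DATA)
outputs = [sigmoid(w1 * x1 + w2 * x2 + b) for (x1, x2), _ in AND_DATA]
```

After training, the neuron's output is near 0 for the first three input pairs and near 1 for (1, 1), so rounding the outputs reproduces the AND truth table.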

The main categories of networks are acyclic or feedforward neural networks (where the signal passes in only one direction) and recurrent neural networks (which allow feedback and short-term memories of previous input events). Among the most popular feedforward networks are perceptrons, multi-layer perceptrons and radial basis networks.
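The perceptron, the simplest feedforward network, makes the "weighted vote" idea literal: the neuron fires if the weighted sum of its inputs plus a bias is positive, and its weights change only when it answers wrongly. This sketch assumes two binary inputs and a learning rate of 1; the data sets are the OR and AND truth tables.

```python
def predict(w, b, x):
    """Step activation: fire (1) if the weighted vote plus bias is positive."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron learning rule: adjust weights only on misclassified examples."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in data:
            y = predict(w, b, x)
            if y != target:
                delta = lr * (target - y)
                w[0] += delta * x[0]
                w[1] += delta * x[1]
                b += delta
                mistakes += 1
        if mistakes == 0:
            break  # every example classified correctly
    return w, b


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w_or, b_or = train_perceptron(OR_DATA)
w_and, b_and = train_perceptron(AND_DATA)
```

For linearly separable data such as OR and AND, this rule is guaranteed to stop making mistakes after finitely many updates; a single perceptron cannot learn XOR, which is one reason multi-layer perceptrons are used.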

Deep learning

(Image: Representing images on multiple layers of abstraction in deep learning)

Deep learning uses several layers of neurons between the network's inputs and outputs. The multiple layers can progressively extract higher-level features from the raw input. For example, in image processing, lower layers may identify edges, while higher layers may identify the concepts relevant to a human such as digits or letters or faces. Deep learning has drastically improved the performance of programs in many important subfields of artificial intelligence, including computer vision, image classification and others.

Deep learning often uses convolutional neural networks for many or all of its layers. In a convolutional layer, each neuron receives input from only a restricted area of the previous layer called the neuron's receptive field. This can substantially reduce the number of weighted connections between neurons, and creates a hierarchy similar to the organization of the animal visual cortex.
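A one-dimensional convolution shows both ideas from the paragraph above: each output neuron looks only at a small window (its receptive field), and every output reuses the same small set of weights. The signal and the two-weight edge-detecting kernel below are hypothetical; like most deep-learning libraries, the sketch actually computes cross-correlation (the kernel is not flipped).

```python
def conv1d(signal, kernel):
    """'Valid' 1-D convolution (cross-correlation, as in most deep learning code)."""
    k = len(kernel)
    out = []
    for i in range(len(signal) - k + 1):
        # Output neuron i sees only signal[i : i + k]: its receptive field.
        window = signal[i:i + k]
        out.append(sum(w * x for w, x in zip(kernel, window)))
    return out


# A step "edge" in a 1-D signal and a hypothetical edge-detecting kernel.
signal = [0, 0, 0, 1, 1, 1]
edge_kernel = [-1, 1]
edges = conv1d(signal, edge_kernel)
```

The output is non-zero only where the signal jumps. A fully connected layer mapping these 6 inputs to 5 outputs would need 30 weights; the shared kernel needs 2, which is the reduction in weighted connections described above.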

In a recurrent neural network the signal will propagate through a layer more than once; thus, an RNN is an example of deep learning. RNNs can be trained by gradient descent, but long-term gradients which are back-propagated can "vanish" (that is, they can tend to zero) or "explode" (that is, they can tend to infinity), known as the vanishing gradient problem. The long short-term memory (LSTM) technique can prevent this in most cases.

Specialized languages and hardware

Specialized languages for artificial intelligence have been developed, such as Lisp, Prolog, TensorFlow and many others. Hardware developed for AI includes AI accelerators and neuromorphic computing.
